by Isaac Greszes, Eleos

Purpose-Built AI for Care at Home

How Care at Home leaders can move beyond AI pilots

Care at Home is increasingly turning to AI to address documentation burden, clinician burnout, and regulatory pressure. While AI has the potential to address these issues and more, practical results remain uneven, leaving agencies with a lot of experimentation, but little clarity on actual value.

When evaluating AI solutions, focus on real-world outcomes, how the solution fits into your existing workflow, whether the software is scalable, and how it handles changing regulations. You should also look for AI solutions that are purpose-built for care at home. This series of articles will help you make informed, risk-aware decisions about AI adoption.

AI is Coming Fast

Home health and hospice leaders are navigating a difficult balance: persistent workforce shortages, rising provider burnout, expanding documentation requirements, and increasing regulatory scrutiny — all within thin operating margins.

At the same time, AI has moved quickly from experimental to strategic. Many organizations are now evaluating AI not just for productivity, but for operational and administrative efficiency, clinician experience, compliance readiness, and financial performance.

And the stakes are high

Early results across the market have been inconsistent. Some organizations report meaningful reductions in administrative burden and a clear return on investment, while others struggle to find value after adoption. The difference often lies not in whether AI was adopted, but in how it was designed, supported, and governed.

The pilot problem

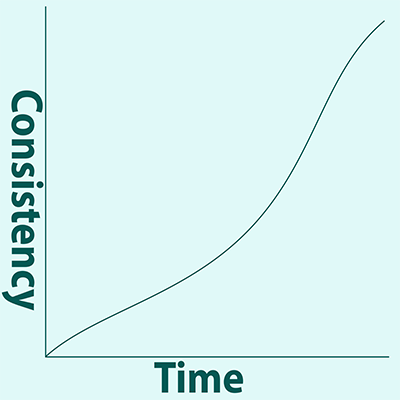

As AI adoption accelerates, many organizations find themselves caught in extended pilot cycles — testing multiple tools without committing to the operational changes required for scale. While pilots can validate technical feasibility, they rarely provide the consistency or measurement discipline needed to demonstrate sustained ROI in regulated care at home environments.

Quality over Quantity

Why the right evidence matters

In today’s AI market, product demonstrations are easy to produce. Documented outcomes are not.

Executive leaders should expect vendors to demonstrate real-world impact, supported by customer data, third-party validation, or peer-reviewed research. Credible AI partners should be able to explain how their results translate to care at home, and where limitations exist. The challenge is not a lack of information from pilots, but a lack of evidence that those pilots’ results can be reproduced, measured, and sustained in a care at home setting.

Objective Evidence that Matters

When evaluating AI platforms, leaders should look for evidence related to:

- Documentation efficiency, such as reduced time per visit or faster note completion

- Operational ROI, including quicker billing readiness or reduced rework

- Compliance support, such as documentation completeness or audit preparedness

- Provider experience, including reduced perceived administrative burden

- Care outcomes, including patient engagement and satisfaction

AI solutions can impact efficiency and burnout, but these outcomes depend heavily on whether the solution was built for care at home, the quality of implementation, how easily it integrates into your workflow, and how it is governed. If a vendor cannot explain how results were achieved and whether they are reliable and repeatable outside the pilot, the vendor and the solution should be evaluated carefully.

General Purpose AI

And inconsistent results

Many AI tools marketed to healthcare organizations rely on general-purpose language models designed for tasks like summarization, chat, or content generation — not for producing structured clinical notes aligned to regulatory and reimbursement requirements.

Home health and hospice documentation often includes:

- Clinical observations made in non-clinical environments

- Structured requirements tied to reimbursement and regulation

- Risk-sensitive language related to safety, decline, or end-of-life care

- Significant variation across disciplines, visit types, and patient contexts

Where generic AI breaks down

In these settings, AI tools based on general-purpose language models introduce risks related to accuracy, hallucinations, bias, privacy, and workflow fit — because they were not designed to operate within structured clinical, regulatory, and reimbursement frameworks.

In practice, organizations report that the additional oversight required to validate or correct AI-generated output can reduce — or even negate — anticipated efficiency gains, limiting adoption and ROI. As a result, organizations often remain stuck in pilot mode — investing time and effort in validation without achieving the scale or consistency required for meaningful return.

The right question

When evaluating an AI solution, the right question is not whether the tool can record a conversation and translate it into notes, or whether it can reduce documentation, but whether it can consistently support high-quality clinical documentation at scale without increasing burden or creating compliance risks.

Purpose-Built AI

What it means and why it drives operational impact

In care at home environments, purpose-built AI should be evaluated less as a point solution and more as foundational infrastructure — one designed to support regulated clinical workflows consistently over time.

Many AI platforms label themselves as “purpose-built,” but leaders must look past marketing language to truly scrutinize the way the technology is designed and deployed. In regulated clinical environments, purpose-built AI typically incorporates:

- Domain-specific clinical intelligence, informed by real documentation patterns

- Provider involvement in defining structure, logic, and validation criteria

- Structured outputs aligned to required note components, in addition to free-text summaries

- Grounding mechanisms that reduce fabricated or misattributed content

- Privacy-conscious data handling, with explicit limits on data retention and reuse

Research consistently shows that providers prefer AI systems that function as collaborative tools — preserving human oversight while reducing administrative load — rather than fully automated systems that completely bypass clinical judgment. These characteristics directly affect whether AI improves documentation time, supports compliance workflows, and earns provider trust — all prerequisites for driving ROI.

These design choices are what allow AI systems to move beyond experimentation and begin delivering durable efficiency, compliance support, and clinician adoption at scale.

# # #

This article is part 1 in a 4-part series. Come back next week for “Scalability, Security, and Governance.”

About Eleos

At Eleos, we believe the path to better healthcare is paved with provider-focused technology. Our purpose-built AI platform streamlines documentation, simplifies compliance and surfaces deep care insights to drive better client outcomes. Created using real-world care sessions and fine-tuned by our in-house clinical experts, our AI tools are scientifically proven to reduce documentation time by more than 70% and boost client engagement by 2x. With Eleos, providers are free to focus less on administrative tasks and more on what got them into this field in the first place: caring for their clients.

©2026 by The Rowan Report, Peoria, AZ. All rights reserved. This article originally appeared in The Rowan Report. One copy may be printed for personal use: further reproduction by permission only. editor@therowanreport.com